Live Aerial Crowd Detection

Project

This project performs live crowd detection from an aerial vehicle and was built in 10 weeks. The system takes a live video feed from an aerial platform, either a plane or a drone, and runs a neural network on it to detect objects.

These detections are then plotted as geographic coordinates, using the vehicle's telemetry to place the objects in the world. This data allows the system to perform detection, crowd tracking, and feedback control based on almost any object of interest. All of this is done live, which is both more challenging and more useful than recording data for later analysis.

Videos

The first video shows how the basic YOLO model performs at detecting people and cars. It does quite well, but the second video, which shows results from the trained model, detects objects at odd or higher angles much more reliably.

Training

The default model worked well as long as the altitude was relatively low and the camera angle was relatively small, but its performance degraded dramatically as altitude increased. This made it necessary to train the model on thousands of high-angle photos of people, cars, buses, and bicycles.

The classifications from the model trained on this data set are a large improvement over the default behavior, though even better results could likely be achieved by training on images captured with this project's own hardware.

Method

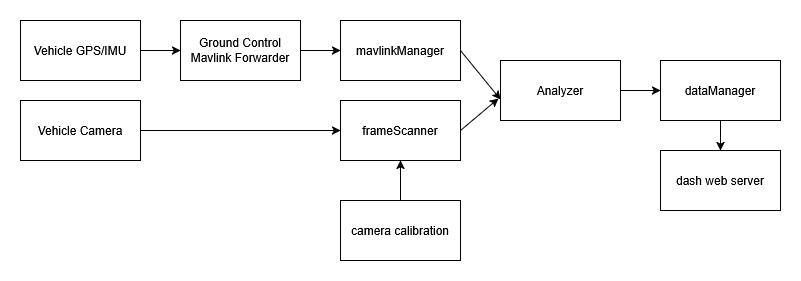

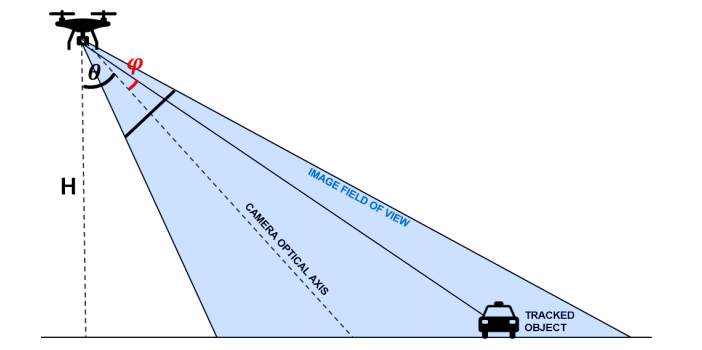

The data pipeline begins with aerial footage streamed directly to a ground station alongside the vehicle's telemetry. Both a plane and a quadrotor were built for this project; the plane offers better operating time and range, while the quadrotor makes it easier to collect stable data. The footage is then processed using a YOLO11 model trained on high-angle security footage. Finally, the vehicle's telemetry is used to calculate the ground sample distance, which gives an approximation of where the objects detected in the camera frame lie relative to the vehicle.
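As a rough illustration of the ground sample distance step, here is a minimal sketch. It assumes a pinhole camera pointed straight down over flat ground, square pixels, and an image whose top edge faces the vehicle's heading. These assumptions, along with the function and parameter names, are illustrative and are not taken from the project's code.

```python
import math


def ground_sample_distance(altitude_m, hfov_deg, image_width_px):
    """Meters of ground covered by one pixel for a straight-down camera."""
    # Width of the ground footprint from altitude and horizontal field of view.
    ground_width_m = 2.0 * altitude_m * math.tan(math.radians(hfov_deg) / 2.0)
    return ground_width_m / image_width_px


def pixel_to_ground_offset(px, py, image_width_px, image_height_px,
                           altitude_m, hfov_deg, heading_deg):
    """Return (east_m, north_m) of a pixel relative to the point below the vehicle."""
    gsd = ground_sample_distance(altitude_m, hfov_deg, image_width_px)
    # Offset from the image center. Image y grows downward, so flip it
    # to get the distance ahead of the vehicle.
    right_m = (px - image_width_px / 2.0) * gsd
    forward_m = (image_height_px / 2.0 - py) * gsd
    # Rotate from the vehicle frame into east/north using the compass
    # heading (0 degrees = north, increasing clockwise).
    h = math.radians(heading_deg)
    east_m = right_m * math.cos(h) + forward_m * math.sin(h)
    north_m = -right_m * math.sin(h) + forward_m * math.cos(h)
    return east_m, north_m
```

For example, at 100 m altitude with a 90 degree horizontal field of view and a 1000 pixel wide image, each pixel covers roughly 0.2 m of ground. A tilted camera would need a full projection that also accounts for pitch and roll, which this sketch leaves out.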

In practice, this calculation proved to be one of the most difficult parts of the project, as it is very sensitive to transmission lag, noise, and small rounding errors.

Credit to Navaneeth Balamuralidhar, Sofia Tilon, and Francesco Nex for this figure.

Once this is done, the locations of the objects can be transformed into geographic coordinates using the vehicle's latitude and longitude as provided by GPS. With this data, the objects can be grouped using the DBSCAN (Density-Based Spatial Clustering of Applications with Noise) algorithm. After groups are formed, a convex hull is computed around the members of each group and drawn.
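The steps in this paragraph can be sketched in plain Python as follows. This is a simplified illustration, not the project's implementation. For readability it clusters detections as east/north offsets in meters, so that `eps` is a distance in meters, and only then converts the hull to latitude and longitude with a flat-Earth approximation. All function names, the `eps` and `min_pts` values, and the sample coordinates are hypothetical.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def offset_to_lat_lon(lat_deg, lon_deg, east_m, north_m):
    """Shift a GPS fix by a small east/north offset (flat-Earth approximation)."""
    dlat = math.degrees(north_m / EARTH_RADIUS_M)
    dlon = math.degrees(east_m / (EARTH_RADIUS_M * math.cos(math.radians(lat_deg))))
    return lat_deg + dlat, lon_deg + dlon


def dbscan(points, eps, min_pts):
    """Minimal O(n^2) DBSCAN. Returns one label per point; -1 means noise."""
    labels = [None] * len(points)

    def neighbours(i):
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_pts:
            labels[i] = -1  # provisionally noise; may become a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # border point: joins but does not expand
            if labels[j] is not None:
                continue
            labels[j] = cluster
            reachable = neighbours(j)
            if len(reachable) >= min_pts:
                queue.extend(reachable)  # core point: keep growing the cluster
    return labels


def convex_hull(points):
    """Andrew's monotone chain; hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    def half(seq):
        chain = []
        for p in seq:
            while len(chain) >= 2 and cross(chain[-2], chain[-1], p) <= 0:
                chain.pop()
            chain.append(p)
        return chain[:-1]

    return half(pts) + half(reversed(pts))


# Hypothetical east/north offsets (meters) of detections from one frame.
detections = [(0, 0), (1, 0), (0, 1), (1, 1), (0.5, 0.5), (40, 40)]
labels = dbscan(detections, eps=2.0, min_pts=3)
crowd = [p for p, lab in zip(detections, labels) if lab == 0]
hull = convex_hull(crowd)
# Convert the hull to latitude/longitude around a hypothetical vehicle fix.
outline = [offset_to_lat_lon(47.0, 8.0, e, n) for e, n in hull]
```

In a real pipeline, library implementations such as scikit-learn's `sklearn.cluster.DBSCAN` and SciPy's `scipy.spatial.ConvexHull` would likely be a better fit than these hand-written versions. The source does not say which implementations the project used.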

These object coordinates are constantly updated as new data is collected, allowing data analysis to be conducted live. This means that the control for the vehicle can be updated as detections are made, and that crowds and groupings can be evaluated as they form. The neural network used for this project is also very adaptable: as long as the training data exists, the same system can be used to track people, cars, animals, or anything else of interest.
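One simple way to keep the set of object coordinates current is a time-windowed buffer that drops detections once they are older than a chosen age. The sketch below is purely illustrative: the `DetectionWindow` class, its fields, and the five-second window are assumptions, and the source does not describe how the project stores or expires its detections. It also assumes timestamps arrive in non-decreasing order.

```python
from collections import deque


class DetectionWindow:
    """Keeps only detections seen within the last `max_age_s` seconds."""

    def __init__(self, max_age_s):
        self.max_age_s = max_age_s
        self._items = deque()  # (timestamp_s, lat, lon, label), oldest first

    def add(self, timestamp_s, lat, lon, label):
        """Store a new detection and discard any that have expired."""
        self._items.append((timestamp_s, lat, lon, label))
        self._prune(timestamp_s)

    def _prune(self, now_s):
        # Oldest entries sit at the left end, so pop until one is fresh enough.
        while self._items and now_s - self._items[0][0] > self.max_age_s:
            self._items.popleft()

    def current(self, label=None):
        """Return (lat, lon) pairs still in the window, optionally by class."""
        return [(lat, lon) for _, lat, lon, lab in self._items
                if label is None or lab == label]


# Hypothetical detections arriving over time.
window = DetectionWindow(max_age_s=5.0)
window.add(0.0, 47.0001, 8.0001, "person")
window.add(3.0, 47.0002, 8.0002, "car")
window.add(6.0, 47.0003, 8.0003, "person")  # the detection from t=0 expires here
```

Clustering can then be re-run on `window.current("person")` after each update, so that groupings are evaluated as they form.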